The seven NIC bonding modes in Linux: principles and configuration

What is a network card bond?

Bonding binds several physical network cards into one logical network card that works with a single IP address. It can increase bandwidth and also provides redundancy. It is most often used for redundancy, with each NIC connected to a different switch to improve reliability. When a server's single link does not give enough bandwidth, bonding can also be used to add bandwidth.


Introduction to bonding

There are seven bonding modes, numbered 0 to 6: mode 0 (balance-rr), mode 1 (active-backup), mode 2 (balance-xor), mode 3 (broadcast), mode 4 (802.3ad), mode 5 (balance-tlb) and mode 6 (balance-alb).

Three of them are commonly used in daily work:

mode=0: Load balancing with automatic redundancy. The switch must support it and be configured for it.

mode=1: Automatic redundancy (active-backup). If one link goes down, another link automatically takes over.

mode=6: Load balancing with automatic redundancy. No switch support or configuration is required.

NIC bonding modes

The seven bonding modes are described below:

1, mode=0 (balance-rr) (round-robin policy)

This mode balances load across the links to increase bandwidth, and it supports fault tolerance. If one link fails, traffic automatically moves to the remaining links.

The switch ports must be configured as an aggregation group, which Cisco calls a port channel.

Features: Packets are transmitted in sequential order. The first packet goes out eth0, the next goes out eth1, and so on in a cycle until the last packet is sent. This mode provides load balancing and fault tolerance.

However, the packets of one connection or session can leave from different interfaces and travel over different links. When that happens, they are likely to arrive at the client out of order. TCP can treat out-of-order segments as lost and retransmit them, so network throughput drops.
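Below is a minimal POSIX shell sketch of the round-robin order described above. The `rr_slave` function is a hypothetical helper and is not part of the bonding driver. It only models which slave the N-th packet leaves on.

```shell
# Hypothetical helper: print the slave that balance-rr (mode=0) would use
# for packet number $1 (counting from 0), given the slave list in $2...
# It models only the rotation "packet i goes to slave i mod N".
rr_slave() {
    pkt=$1
    shift
    idx=$(( pkt % $# ))   # position of the chosen slave in the list
    i=0
    for s in "$@"; do
        if [ "$i" -eq "$idx" ]; then
            echo "$s"
            return 0
        fi
        i=$(( i + 1 ))
    done
}

# Six packets over two slaves alternate between eth0 and eth1.
for p in 0 1 2 3 4 5; do
    echo "packet $p -> $(rr_slave "$p" eth0 eth1)"
done
```

Consecutive packets of a single connection take different paths, which is where the out-of-order problem comes from.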

2, mode=1 (active-backup) (primary-backup policy)

This is the active-backup mode. Only one NIC is active and the other is on standby, and all traffic is handled on the active link.

The switch ports must not be bundled into an aggregation group. If they are, the switch sends packets to both NICs and the standby NIC drops the ones it receives, so about half of the packets are lost.

Features: Only one device is active. When it goes down, a backup is immediately promoted to primary.

Only one MAC address, that of the bond, is visible externally. This keeps the switch's MAC address table from getting confused.


This mode provides fault tolerance only. Its advantage is high network availability. Its drawback is low resource utilization: only one interface is active at a time, so with N interfaces the utilization is 1/N.
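The failover rule can be sketched the same way. The `pick_active` function is hypothetical and ignores driver options such as primary=. On a real system, the current choice appears in the "Currently Active Slave" line of /proc/net/bonding/bond0.

```shell
# Hypothetical model of active-backup (mode=1) failover.
# $1 = currently active slave; remaining args look like "name:up" or "name:down".
# Keep the current slave while its link is up; otherwise promote the first
# slave whose link is up. Print nothing and return 1 if every link is down.
pick_active() {
    current=$1
    shift
    for entry in "$@"; do
        if [ "$entry" = "$current:up" ]; then
            echo "$current"
            return 0
        fi
    done
    for entry in "$@"; do
        case $entry in
            *:up) echo "${entry%:up}"; return 0 ;;
        esac
    done
    return 1
}

pick_active eth0 eth0:up eth1:up     # eth0 stays active
pick_active eth0 eth0:down eth1:up   # eth1 takes over
```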

3, mode=2 (balance-xor) (balance strategy)

NIC bonding mode 2 performs XOR-hash load sharing. It is used together with a static (non-negotiated) aggregation group on the switch, so the switch ports must be configured as a port channel. The hash can be chosen with the xmit_hash_policy option.

Features: Packets are transmitted according to the selected transmit hash policy. The default policy is (source MAC address XOR destination MAC address) % number of slaves. Other policies can be selected with the xmit_hash_policy option. This mode provides load balancing and fault tolerance.
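Below is a sketch of the default layer2 hash. Following older Linux bonding documentation, only the last byte of each MAC address enters the XOR. Newer kernels also XOR in the packet type ID, which this simplified, hypothetical `xor_slave` helper leaves out.

The source MAC 18:66:da:4d:c3:e8 is taken from the status output later in this article. The destination MAC is made up for the example.

```shell
# Simplified balance-xor (mode=2) layer2 hash (hypothetical helper):
#   slave index = (last octet of src MAC XOR last octet of dst MAC) % slave_count
# Recent kernels also XOR in the packet type ID; that is omitted here.
xor_slave() {
    src_last=${1##*:}    # last octet of the source MAC, e.g. "e8"
    dst_last=${2##*:}    # last octet of the destination MAC
    count=$3             # number of slaves in the bond
    echo $(( (0x$src_last ^ 0x$dst_last) % $count ))
}

# The same MAC pair always maps to the same slave index.
xor_slave 18:66:da:4d:c3:e8 00:11:22:33:44:01 2
```

Because the result depends only on the two MAC addresses, all traffic between the same pair of hosts uses one slave.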

4, mode=3 (broadcast) (broadcast policy)

Every packet is sent out of every network interface. There is no load balancing, only redundancy, and it wastes a lot of resources. This mode suits environments such as the financial industry, which need a highly reliable network that must not fail. The switch ports must be configured as a static (non-negotiated) aggregation group.

Features: Each packet is transmitted on each slave interface, this mode provides fault tolerance.

5, mode=4 (802.3ad) (IEEE 802.3ad dynamic link aggregation)

This mode supports the IEEE 802.3ad protocol and works with switch ports configured for LACP aggregation. The transmit hash can be chosen with xmit_hash_policy.

The standard requires all devices to operate in the same rate and duplex mode when aggregating, and, as with all bonding load balancing modes except balance-rr mode, no connection can use more than the bandwidth of one interface.

Features: Creates aggregation groups whose members share the same speed and duplex settings. Multiple slaves work in the same active aggregator according to the 802.3ad specification.

The slave used for outbound traffic is selected by the transmit hash policy. The policy can be changed from the default XOR policy to another one with the xmit_hash_policy option.

Note that not all transmit policies are 802.3ad compliant. This is particularly true with respect to the packet mis-ordering requirements in section 43.2.4 of the 802.3ad standard. Different implementations may tolerate this differently.
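To see why a single connection stays on one link, here is a rough model of xmit_hash_policy=layer3+4. It loosely follows the formula in the kernel's bonding documentation:

- take the ports;
- XOR in the IP addresses;
- fold the result with shifts;
- reduce it modulo the slave count.

It is not bit-exact with any kernel version, since byte order and other details are ignored. The helper names are hypothetical.

```shell
# Rough model of xmit_hash_policy=layer3+4 (NOT bit-exact with the kernel).
# A flow's addresses and ports are folded into one number, so every packet
# of the same TCP/UDP connection picks the same slave.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split "a.b.c.d" into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Usage: l34_slave SRC_IP DST_IP SRC_PORT DST_PORT SLAVE_COUNT
l34_slave() {
    h=$(( ($3 << 16) | $4 ))                          # both ports in one word
    h=$(( $h ^ $(ip2int "$1") ^ $(ip2int "$2") ))     # mix in the addresses
    h=$(( $h ^ ($h >> 16) ))                          # fold high bits down
    h=$(( $h ^ ($h >> 8) ))
    echo $(( $h % $5 ))                               # pick a slave index
}

l34_slave 192.168.1.3 192.168.1.10 40000 80 2
l34_slave 192.168.1.3 192.168.1.10 40001 80 2
```

The same four-tuple always hashes to the same slave. Changing only the source port, as a new connection does, may move the flow to the other slave.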


Prerequisites:

Condition 1: The slave NIC drivers support ethtool for reporting each slave's speed and duplex settings.

Condition 2: The switch supports IEEE 802.3ad Dynamic link aggregation.

Condition 3: Most switches require specific configuration to support 802.3ad mode.
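Condition 1 can be checked before building the bond. The speed and duplex values that ethtool reports are also exposed in /sys/class/net/<interface>/speed and /sys/class/net/<interface>/duplex, and this sketch compares them across the slaves. The SYSFS_NET override and the function name are hypothetical; they exist only so the check can be tried against a fake directory.

```shell
# Check the 802.3ad precondition that all slaves share one speed and duplex.
# Reads <SYSFS_NET>/<if>/speed and <SYSFS_NET>/<if>/duplex.
# SYSFS_NET is a hypothetical override; it defaults to the real sysfs path.
SYSFS_NET=${SYSFS_NET:-/sys/class/net}

same_speed_duplex() {
    first=""
    for nic in "$@"; do
        cur="$(cat "$SYSFS_NET/$nic/speed")/$(cat "$SYSFS_NET/$nic/duplex")"
        echo "$nic: $cur"
        if [ -z "$first" ]; then
            first=$cur                  # remember the first slave's settings
        elif [ "$cur" != "$first" ]; then
            echo "mismatch: $nic is $cur, expected $first" >&2
            return 1
        fi
    done
}

# Example on a real host: same_speed_duplex eth0 eth1
```

On the Linux side, a mode 4 bond is then configured in ifcfg-bond0 with a line such as BONDING_OPTS="mode=4 miimon=100 lacp_rate=fast xmit_hash_policy=layer3+4". The lacp_rate and hash policy values are example choices, and the switch ports must be set to LACP.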

6, mode=5 (balance-tlb) (adaptive transmit load balancing)

Outgoing traffic is distributed across the slaves according to each slave's current load. Incoming traffic is received by the currently active slave.

This mode requires the slave NIC drivers to support ethtool, and ARP monitoring is not available.

Features: Channel bonding that does not require any special switch support.

Outbound traffic is distributed across the slaves based on their current load, computed relative to their speed. If the slave that is receiving data fails, another slave takes over the failed slave's MAC address.

Prerequisite:

ethtool can report the speed of each slave.
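Below is a simplified sketch of the transmit choice in balance-tlb. It loosely mirrors the kernel's ALB code: traffic is sent on the slave with the most spare capacity, meaning link speed minus current load. The name:speed:load argument format and the `tlb_slave` helper are hypothetical, and both values are in Mbit/s.

```shell
# Simplified balance-tlb (mode=5) transmit choice (hypothetical helper).
# Each argument is "name:speed_mbps:load_mbps"; the slave with the largest
# spare capacity (speed - load) is printed. Ties go to the first slave.
tlb_slave() {
    best=""
    best_gap=""
    for entry in "$@"; do
        name=${entry%%:*}        # e.g. "eth0"
        rest=${entry#*:}         # e.g. "1000:800"
        speed=${rest%%:*}        # link speed in Mbit/s
        load=${rest#*:}          # current transmit load in Mbit/s
        gap=$(( speed - load ))  # spare capacity on this slave
        if [ -z "$best_gap" ] || [ "$gap" -gt "$best_gap" ]; then
            best=$name
            best_gap=$gap
        fi
    done
    echo "$best"
}

# eth1 has 700 Mbit/s spare versus 200 on eth0, so new traffic goes to eth1.
tlb_slave eth0:1000:800 eth1:1000:300
```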

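7, mode=6 (balance-alb) (adaptive load balancing)

This mode adds receive load balancing (RLB) to the transmit load balancing (TLB) of mode 5 (balance-tlb).

No switch support is required. Receive load balancing is achieved through ARP negotiation. The bonding driver intercepts the ARP replies sent by the local system and rewrites their source MAC address with the MAC address of one of the slaves. As a result, different peers send their traffic to different NICs.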
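The receive side of balance-alb can be pictured as handing out slave MAC addresses to peers in turn. The kernel documentation describes this distribution as round robin among the highest-speed slaves. The hypothetical `rlb_assign` helper below only models that assignment, using the two permanent MAC addresses from the status output later in this article.

```shell
# Hypothetical model of balance-alb (mode=6) receive load balancing:
# each peer that ARPs for the bond's IP is answered with one slave's MAC,
# handed out round robin, so different peers send to different NICs.
# Usage: rlb_assign "MAC1 MAC2 ..." PEER_IP...
rlb_assign() {
    macs=$1
    shift
    n=0
    for m in $macs; do n=$(( n + 1 )); done   # number of slave MACs
    i=0
    for peer in "$@"; do
        want=$(( i % n ))                     # next MAC in the rotation
        j=0
        for m in $macs; do
            if [ "$j" -eq "$want" ]; then
                echo "$peer -> $m"
                break
            fi
            j=$(( j + 1 ))
        done
        i=$(( i + 1 ))
    done
}

rlb_assign "18:66:da:4d:c3:e8 18:66:da:4d:c3:e7" \
    192.168.1.10 192.168.1.11 192.168.1.12
```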


Note: mode 5 and mode 6 need no switch-side configuration, and the NICs are aggregated automatically. mode 4 requires the switch to support 802.3ad. mode 0, mode 2 and mode 3 require static aggregation on the switch.

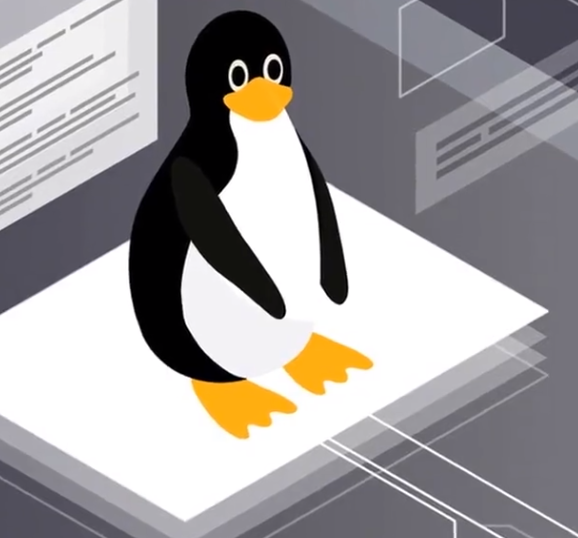

Linux NIC teaming configuration

Environment:

System: CentOS 7

Network cards: eth0, eth1

Load mode: mode 0 (round-robin policy)

# Configure the bonding kernel module (alias and default options)

echo "alias bond0 bonding" > /etc/modprobe.d/bond.conf

echo "options bond0 miimon=100 mode=0" >> /etc/modprobe.d/bond.conf

nmcli c reload  # reload the connection configuration files

1. Configure the physical NICs

[root@lixin network-scripts]#cat ifcfg-eth0

DEVICE=eth0

TYPE=Ethernet

ONBOOT=yes

BOOTPROTO=none

MASTER=bond0

USERCTL=no

SLAVE=yes

# Without SLAVE=yes, the NICs must be enslaved at boot with: ifenslave bond0 eth0 eth1

[root@lixin network-scripts]#cat ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

BOOTPROTO=none

MASTER=bond0

SLAVE=yes

USERCTL=no

[root@lixin network-scripts]#
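If several hosts need the same layout, the slave files above can be generated with a short script. This sketch writes exactly the keys shown above. IFCFG_DIR is a hypothetical override, added so the script can be tried on a scratch directory before touching /etc/sysconfig/network-scripts.

```shell
# Write an ifcfg file for each slave NIC, with the same keys as shown above.
# IFCFG_DIR is a hypothetical override; it defaults to the CentOS 7 location.
IFCFG_DIR=${IFCFG_DIR:-/etc/sysconfig/network-scripts}

# Usage: write_slave_cfg MASTER NIC...
write_slave_cfg() {
    master=$1
    shift
    for nic in "$@"; do
        cat > "$IFCFG_DIR/ifcfg-$nic" <<EOF
DEVICE=$nic
TYPE=Ethernet
ONBOOT=yes
BOOTPROTO=none
MASTER=$master
SLAVE=yes
USERCTL=no
EOF
    done
}

# Example: write_slave_cfg bond0 eth0 eth1
```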

2. Configure the logical NIC bond0

[root@lixin network-scripts]#cat ifcfg-bond0   # this file has to be created by hand

DEVICE=bond0

BOOTPROTO=none

ONBOOT=yes

TYPE=Bond

BONDING_MASTER=yes

IPADDR=192.168.1.3

GATEWAY=192.168.1.1

PREFIX=24

DEFROUTE=yes

USERCTL=no

BONDING_OPTS="mode=0 miimon=100"

DNS1=119.29.29.29

DNS2=223.5.5.5

# ifcfg-bond0 does not exist by default, so create it with vim

[root@lixin network-scripts]#

3. Stop NetworkManager and restart the network

First close the NetworkManager service:

systemctl stop NetworkManager

systemctl disable NetworkManager

Then restart the network:

systemctl restart network

4. Verify the bond

[root@localhost sysconfig]# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: load balancing (round-robin)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth0

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 18:66:da:4d:c3:e8

Slave queue ID: 0

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 18:66:da:4d:c3:e7

Slave queue ID: 0
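When there are many slaves, the status file can be summarised with awk. This sketch prints each slave's MII status and returns 1 if any slave is not up. The function name is hypothetical, and the sketch assumes the "Slave Interface:" and "MII Status:" line format shown above.

```shell
# Summarise a bonding status file such as /proc/net/bonding/bond0:
# print "<slave> <MII status>" per slave and return 1 if any slave is not up.
# The bond-level "MII Status" line (before any slave) is skipped.
check_bond() {
    awk '
        /^Slave Interface:/ { slave = $3 }
        /^MII Status:/ && slave != "" {
            print slave, $3
            if ($3 != "up") bad = 1
            slave = ""
        }
        END { exit bad }
    ' "${1:-/proc/net/bonding/bond0}"
}

# Example: check_bond /proc/net/bonding/bond0
```

On the host above, check_bond /proc/net/bonding/bond0 would print "eth0 up" and "eth1 up" and return 0.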